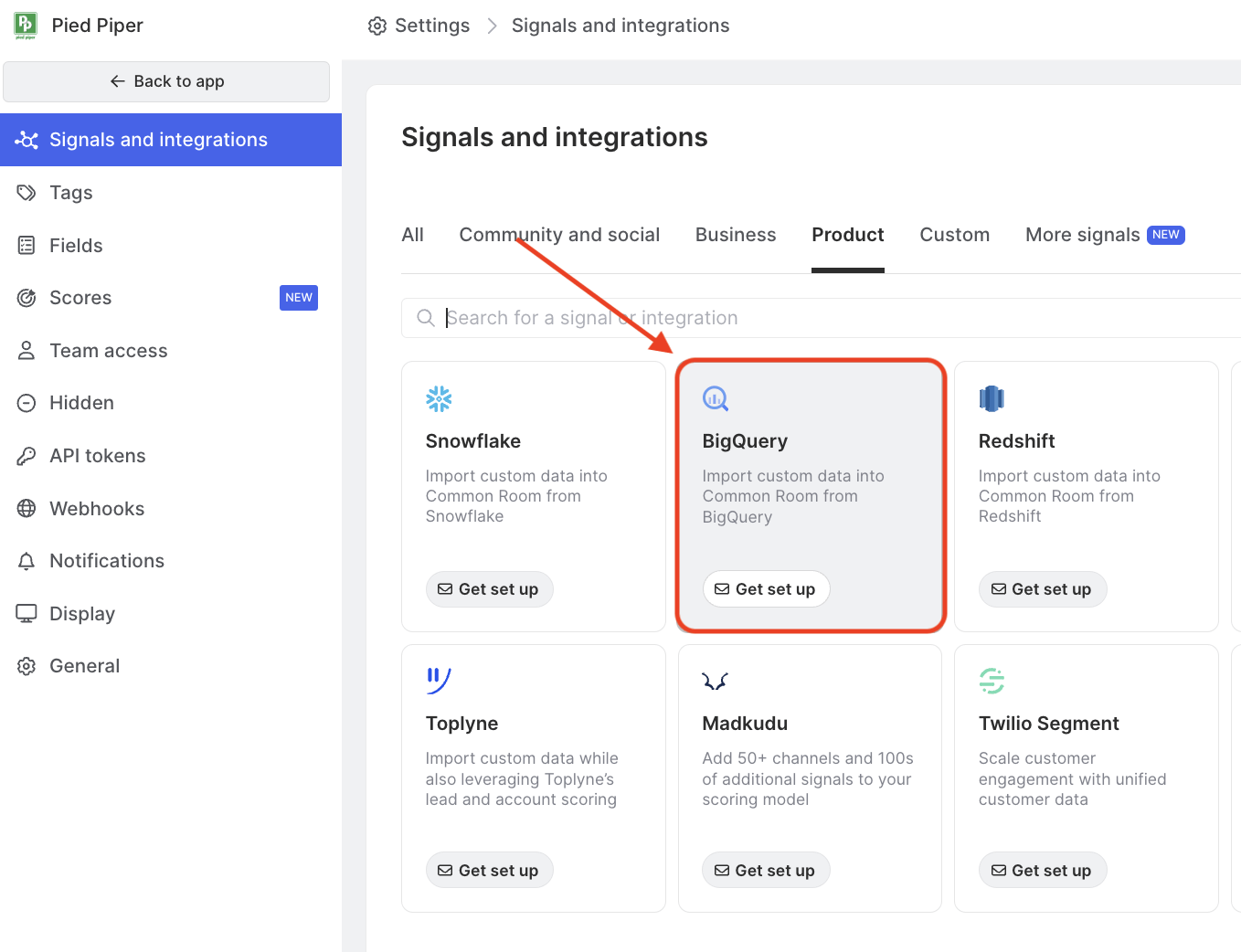

BigQuery/GCS Integration Guide

Last updated Apr 9th, 2025

Overview

Common Room supports importing data from BigQuery via Google Cloud Storage (GCS). Importing through GCS is a good fit if you want to set up a recurring import of data from your warehouse into Common Room.

Setup

When setting up your BigQuery integration via GCS, we recommend creating a new GCS bucket with appropriate permissions so that you have full control over the lifecycle of the data. Setting up the connection involves the following steps:

- [Customer] Create your GCS bucket.

- [Customer] Share the bucket with the Common Room GCP service account (shown in the Pulumi code block below), granting it the roles/storage.objectViewer (read) and roles/storage.objectCreator (write) roles on your import prefix.

- [Common Room] Validate that data can be read from the bucket using test data.

- [Common Room + Customer] Set up the recurring imports by following our general configuration instructions.
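
The Pulumi snippet in this section limits Common Room's access to a single object prefix using an IAM condition that compares the object's resource name with `startsWith`. Before uploading test data, it can help to check which object names fall inside that prefix. The following is a minimal sketch, not part of any Common Room tooling: the function names, bucket name, and object names are hypothetical placeholders, and the code only mirrors the string comparison the condition performs.

```typescript
// Hypothetical helper: builds the same resource-name prefix that the IAM
// condition in the Pulumi snippet checks with startsWith().
function conditionPrefix(bucketName: string, readPrefix: string): string {
  return `projects/_/buckets/${bucketName}/objects/${readPrefix}/`;
}

// Hypothetical helper: returns true if an object (named as it appears inside
// the bucket, e.g. "READ_PREFIX/members/2025-04-09/part-000.csv") would
// satisfy the prefix condition.
function isCoveredByCondition(
  bucketName: string,
  readPrefix: string,
  objectName: string,
): boolean {
  const resourceName = `projects/_/buckets/${bucketName}/objects/${objectName}`;
  return resourceName.startsWith(conditionPrefix(bucketName, readPrefix));
}

// Example usage with placeholder names.
const exampleBucket = "my-common-room-bucket";
const examplePrefix = "READ_PREFIX";
for (const name of [
  "READ_PREFIX/members/2025-04-09/part-000.csv",
  "READ_PREFIX_OLD/members.csv",
  "other/members.csv",
]) {
  console.log(name, isCoveredByCondition(exampleBucket, examplePrefix, name));
}
```

Because the condition prefix ends with `/`, a sibling folder such as `READ_PREFIX_OLD/` is not matched; only objects stored under `READ_PREFIX/` are covered.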

To make the bucket setup as easy as possible, here is a sample Pulumi (TypeScript) program that creates the GCS bucket and grants Common Room access to it.

```typescript
import * as pulumi from "@pulumi/pulumi";
import * as gcp from "@pulumi/gcp";

const COMMON_ROOM_GCS_SERVICE_ACCOUNT_EMAIL =
  "common-room-gcs-access@common-room-production.iam.gserviceaccount.com";

// Prefix (folder) inside the bucket that Common Room imports from.
// This can be undefined if no data imports are configured.
const READ_DATA_PREFIX: string | undefined = "READ_PREFIX";

// Create a GCS bucket; choose your own bucket name.
// IAM Conditions require uniform bucket-level access to be enabled.
const bucket = new gcp.storage.Bucket("common-room-shared-bucket", {
  location: "US",
  uniformBucketLevelAccess: true,
});

if (READ_DATA_PREFIX != null) {
  // bucket.name is a Pulumi Output, so build the condition with
  // pulumi.interpolate rather than a plain template literal.
  const prefixCondition = pulumi.interpolate`resource.name.startsWith("projects/_/buckets/${bucket.name}/objects/${READ_DATA_PREFIX}/")`;

  // Allows Common Room to read objects under the prefix
  new gcp.storage.BucketIAMMember(`common-room-access-read-${READ_DATA_PREFIX}`, {
    bucket: bucket.name,
    role: "roles/storage.objectViewer",
    member: `serviceAccount:${COMMON_ROOM_GCS_SERVICE_ACCOUNT_EMAIL}`,
    condition: {
      title: "ReadPrefixReadAccess",
      expression: prefixCondition,
    },
  });

  // Allows Common Room to write objects under the prefix
  new gcp.storage.BucketIAMMember(`common-room-access-write-${READ_DATA_PREFIX}`, {
    bucket: bucket.name,
    role: "roles/storage.objectCreator",
    member: `serviceAccount:${COMMON_ROOM_GCS_SERVICE_ACCOUNT_EMAIL}`,
    condition: {
      title: "ReadPrefixWriteAccess",
      expression: prefixCondition,
    },
  });
}

export const bucketName = bucket.name;
export const serviceAccountEmail = COMMON_ROOM_GCS_SERVICE_ACCOUNT_EMAIL;
```

Please contact us if you're interested in exploring this option or have any questions!

Requirements

Our GCS integration is included on Enterprise plans and is available as an add-on for Team plans. Please work with your Common Room contact for more information.

FAQ

BigQuery's export to GCS splits the output into multiple files when it is larger than 1 GB. Can Common Room handle multiple files for a given object?

Yes. Our import handles multiple files as long as they are grouped under the same dated path.
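
For reference, a BigQuery `EXPORT DATA` statement that may produce several files uses a URI containing exactly one `*` wildcard, which BigQuery replaces with a number for each file it writes. The sketch below builds such a statement so that one day's files all land under the same dated folder. The path layout (`<prefix>/<objectType>/<YYYY-MM-DD>/part-*.csv`), the `members` object type, and the table name are hypothetical placeholders; confirm the exact layout with Common Room when configuring the recurring import.

```typescript
// Hypothetical layout: gs://<bucket>/<prefix>/<objectType>/<YYYY-MM-DD>/part-*.csv
function datedExportUri(
  bucket: string,
  prefix: string,
  objectType: string,
  date: Date,
): string {
  // Format the date as YYYY-MM-DD in UTC so the folder name does not depend
  // on the local time zone of the machine running this code.
  const day = date.toISOString().slice(0, 10);
  // A single "*" lets BigQuery split a large export into multiple files
  // that share the same dated folder.
  return `gs://${bucket}/${prefix}/${objectType}/${day}/part-*.csv`;
}

// Builds a BigQuery EXPORT DATA statement that writes CSV files to the URI.
function exportStatement(uri: string, sourceTable: string): string {
  const wildcards = (uri.match(/\*/g) ?? []).length;
  if (wildcards !== 1) {
    throw new Error("EXPORT DATA expects exactly one '*' wildcard in the URI");
  }
  return [
    "EXPORT DATA OPTIONS (",
    `  uri = '${uri}',`,
    "  format = 'CSV',",
    "  overwrite = true,",
    "  header = true",
    `) AS SELECT * FROM \`${sourceTable}\`;`,
  ].join("\n");
}

// Example usage with placeholder names.
const uri = datedExportUri(
  "my-common-room-bucket",
  "READ_PREFIX",
  "members",
  new Date(Date.UTC(2025, 3, 9)),
);
console.log(exportStatement(uri, "my-project.my_dataset.members"));
```

Keeping the date in the folder name rather than the file name means every file BigQuery generates for one export shares the same dated path.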